Microsoft: Physical AI & Azure IoT

From Azure to the Factory Floor: Microsoft's Physical AI Journey with Universal Robots.

Technology

Universal Robots

Azure IoT

Houston MTC

Powering Microsoft's Physical AI: How UnderAutomation Bridges the Gap to the Intelligent Edge

At the Microsoft Technology Center (MTC) in Houston, the future of Industry 4.0 is not just being imagined; it is being built. In this hub of industrial innovation, Microsoft researchers and engineers are pioneering Physical AI, a discipline that fuses advanced cloud computing with the tangible reality of robotics.

To bridge the critical gap between high-level AI reasoning in Azure and the precise, real-time control required by Universal Robots (UR), Microsoft relies on the UnderAutomation Universal Robots Communication SDK.

The Vision: Advancing Intelligent Embodiments

Microsoft's research focuses on advancing intelligent embodiments: AI systems that integrate perception, reasoning, and control so that machines can interact adaptively and autonomously in dynamic environments. This is a departure from traditional, pre-programmed automation; it requires robots that can "see," "think," and "act" in real time.

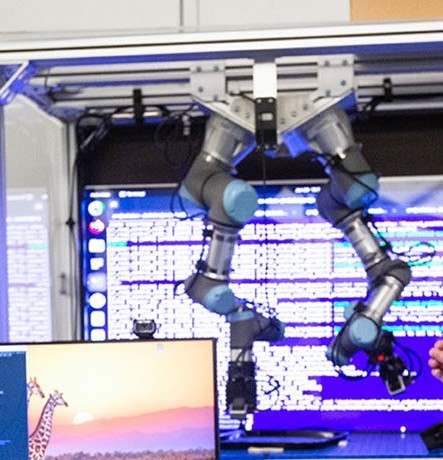

Caption: Researchers collaborate on bimanual coordination, analyzing real-time data and visual feedback to refine AI algorithms.

The Challenge: Connecting .NET to the Factory Floor

For the researchers at the MTC, the challenge was connectivity. Microsoft's AI and "Intelligent Edge" solutions are natively built on the .NET ecosystem. However, industrial robots typically speak their own proprietary languages. To create a seamless, closed-loop system where Azure AI could direct a robot's movements on the fly, they needed a communication layer that was:

- Native to .NET: To integrate effortlessly with Azure IoT and AI services.

- Fast: Capable of high-frequency data exchange for real-time control.

- Reliable: Robust enough for 24/7 industrial demonstrations.
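To make the "fast" requirement concrete: in closed-loop control, the system reads the robot state, computes a correction, and sends a new command once per cycle, so the whole sense-think-act step has to fit inside the control period. UR's RTDE interface streams at up to 125 Hz on CB-series controllers and up to 500 Hz on e-Series controllers, which leaves roughly 8 ms or 2 ms per cycle. The sketch below is a hypothetical, simplified single-axis proportional correction; the function names, gain, and speed limit are illustrative assumptions and not part of the UnderAutomation SDK or any UR API.

```python
def control_period_s(frequency_hz: float) -> float:
    """Time budget (seconds) for one sense-think-act cycle at a given update rate."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1.0 / frequency_hz


def p_step(target: float, measured: float, gain: float, max_speed: float) -> float:
    """One proportional control step: a speed command toward the target, clamped."""
    command = gain * (target - measured)
    return max(-max_speed, min(max_speed, command))


def simulate(target: float, start: float, frequency_hz: float, cycles: int,
             gain: float = 5.0, max_speed: float = 0.25) -> float:
    """Run the loop against an idealised axis that moves exactly at the commanded speed."""
    dt = control_period_s(frequency_hz)
    position = start
    for _ in range(cycles):
        speed = p_step(target, position, gain, max_speed)  # "think": compute correction
        position += speed * dt                              # "act": idealised plant update
    return position
```

Clamping the command keeps a large error from producing an aggressive move. The idealised plant in `simulate` ignores latency and arm dynamics, which a real cell cannot.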

The Solution: UnderAutomation SDK

Microsoft chose the UnderAutomation Universal Robots SDK as the backbone for their robotic cells. By providing a 100% managed .NET library, UnderAutomation eliminated the need for complex middleware or external wrappers.

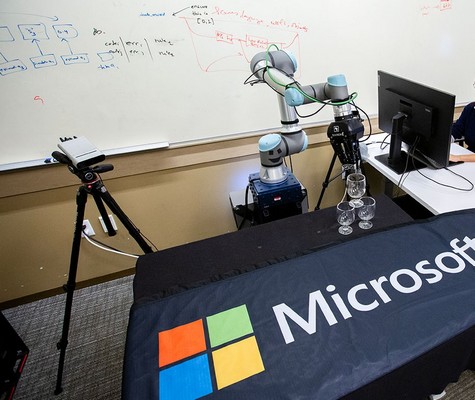

Caption: Demonstrating a collaborative robotic arm in action, highlighting real-time AI integration for advanced control and planning at Microsoft Research.

Why It Matters

Using UnderAutomation, Microsoft engineers could focus on the intelligence of the system rather than the plumbing. The SDK uses native UR protocols: RTDE (Real-Time Data Exchange) for high-frequency state feedback and data exchange, and the Dashboard Server for program control such as loading, starting, and stopping programs. These interfaces send commands and receive feedback at rates sufficient for closed-loop control, allowing the AI to adjust the robot's trajectory within milliseconds based on sensor input.
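For readers curious about what a native UR protocol looks like on the wire: RTDE runs over TCP port 30004 and frames every message with a big-endian header made of a 16-bit package size (header included) and an 8-bit package type encoded as an ASCII letter, such as `V` to request the protocol version, `O` to set up the output recipe, and `S` to start streaming. The Python sketch below builds those requests by hand, following the framing used by Universal Robots' reference RTDE client. It is illustrative only, the helper names are hypothetical, and it says nothing about how the UnderAutomation SDK is implemented internally.

```python
import struct

# RTDE package types are single ASCII characters.
RTDE_REQUEST_PROTOCOL_VERSION = ord("V")       # 86
RTDE_CONTROL_PACKAGE_SETUP_OUTPUTS = ord("O")  # 79
RTDE_CONTROL_PACKAGE_START = ord("S")          # 83

# Header: uint16 package size (including the header itself), uint8 package type.
HEADER = struct.Struct(">HB")


def frame(package_type: int, payload: bytes = b"") -> bytes:
    """Prefix a payload with the RTDE header."""
    return HEADER.pack(HEADER.size + len(payload), package_type) + payload


def request_protocol_version(version: int = 2) -> bytes:
    """Ask the controller to use a given RTDE protocol version."""
    return frame(RTDE_REQUEST_PROTOCOL_VERSION, struct.pack(">H", version))


def setup_outputs(frequency_hz: float, variables: list[str]) -> bytes:
    """Protocol v2 output recipe: a double frequency followed by comma-separated names."""
    payload = struct.pack(">d", frequency_hz) + ",".join(variables).encode("utf-8")
    return frame(RTDE_CONTROL_PACKAGE_SETUP_OUTPUTS, payload)


def start() -> bytes:
    """Start synchronisation; this package has no payload."""
    return frame(RTDE_CONTROL_PACKAGE_START)


def parse_header(data: bytes) -> tuple[int, int]:
    """Return (size, type) from the first three bytes of a received package."""
    return HEADER.unpack_from(data)
```

For example, `request_protocol_version()` returns `b"\x00\x05V\x00\x02"`: a 5-byte package of type `V` carrying protocol version 2. A managed SDK hides this framing, along with recipe negotiation and data-package decoding, behind typed .NET objects.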

Real-World Application: Interactive Object Handling

One of the most compelling demonstrations involves interactive object handling. Using transformer models on platforms such as "Seedonoid," Microsoft shows how robots can handle a wide variety of objects with a level of dexterity not usually expected of standard cobots.

Caption: Leveraging transformer models on Seedonoid for interactive object handling, showcasing Microsoft Research's advancements in AI-guided robotics.

In these scenarios, the UnderAutomation SDK is the invisible thread connecting the "brain" (Azure AI models) to the "hand" (the Universal Robot). It translates inference results into precise robot commands, showing that the future of the factory floor is software-defined.
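As an illustration of that translation step, here is one simple way an inference result could become a robot command: validate a predicted target pose and format it as a URScript `movel` call. URScript poses are written `p[x, y, z, rx, ry, rz]`, with positions in metres and orientation as an axis-angle rotation vector in radians; `a` and `v` are tool acceleration (m/s²) and speed (m/s). The `TargetPose` type, the workspace box, and the speed and acceleration limits are hypothetical choices for this sketch, and the resulting line would still have to be sent through an interface such as the UnderAutomation SDK or one of the controller's URScript ports (for example TCP 30002).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetPose:
    """Hypothetical model output: TCP pose in the robot base frame."""
    x: float   # metres
    y: float
    z: float
    rx: float  # axis-angle rotation vector, radians
    ry: float
    rz: float


# Hypothetical safety box for a demo cell (metres, base frame).
WORKSPACE = {"x": (-0.6, 0.6), "y": (-0.6, 0.6), "z": (0.05, 0.8)}


def to_movel(pose: TargetPose, a: float = 1.2, v: float = 0.25) -> str:
    """Validate a predicted pose and format it as a single URScript movel line."""
    for axis, (low, high) in WORKSPACE.items():
        value = getattr(pose, axis)
        if not low <= value <= high:
            raise ValueError(f"{axis}={value} outside workspace [{low}, {high}]")
    # Hypothetical demo limits on tool speed (m/s) and acceleration (m/s^2).
    if not (0 < v <= 0.5 and 0 < a <= 1.5):
        raise ValueError("speed or acceleration outside demo limits")
    coords = ", ".join(
        f"{c:.4f}" for c in (pose.x, pose.y, pose.z, pose.rx, pose.ry, pose.rz)
    )
    return f"movel(p[{coords}], a={a}, v={v})\n"
```

For example, `to_movel(TargetPose(0.3, -0.2, 0.25, 0.0, 3.1416, 0.0))` returns `"movel(p[0.3000, -0.2000, 0.2500, 0.0000, 3.1416, 0.0000], a=1.2, v=0.25)\n"`. Rejecting out-of-bounds poses before formatting keeps a bad prediction from ever reaching the controller.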

Conclusion

The collaboration at the Houston MTC serves as a blueprint for the future of manufacturing. By combining Microsoft's leadership in AI with the accessible precision of Universal Robots, and tying it all together with UnderAutomation, industries can look forward to a new era of smarter, safer, and more adaptable production lines.

Useful links

Customer success stories

See how our customers integrate UnderAutomation solutions into their industrial projects.